The Unseen Engine: What Exactly is a CPU and Why Does It Matter to You?

In the complex world of technology and finance, understanding the foundational elements can make a significant difference. Think of a computer, a smartphone, or even a smart appliance. What is the core driving force behind its ability to function, to follow your commands, and to process information? At its heart lies a marvel of modern engineering: the Central Processing Unit, or CPU.

Often referred to as the “brain” or the “control center” of any electronic device, the CPU is an intricate electronic circuit. Its primary responsibility is to carry out instructions from software programs and process the data those programs use. Without a CPU, your device is just a collection of inert parts. It’s the CPU that breathes life into the hardware, enabling it to perform tasks, run applications, and connect you to the digital world. For investors and traders navigating a tech-driven landscape, understanding this fundamental component is not a niche interest for computer enthusiasts; it’s crucial for grasping the engine that powers innovation and shapes market trends.

We interact with CPUs constantly, often without realizing it. Every click, every swipe, every calculation, every graphic rendered on your screen is a direct result of the CPU performing countless operations per second. Its performance directly impacts how quickly your applications launch, how smoothly you can multitask, and the overall responsiveness of your device. As we delve deeper, you’ll see how understanding the CPU, its capabilities, and the market dynamics surrounding it can provide valuable insights, particularly if you’re looking at tech-related investments.

So, let’s pull back the curtain and explore this indispensable component. What is it made of? How does it actually process information? How do we measure its power, and why does its design matter so much? By the end of this exploration, you’ll have a solid grasp of the CPU’s critical role, transforming it from an abstract term into a concrete concept vital for understanding the technological backbone of the modern economy.

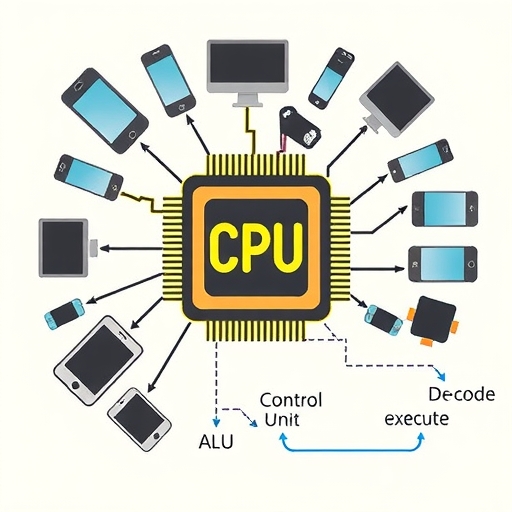

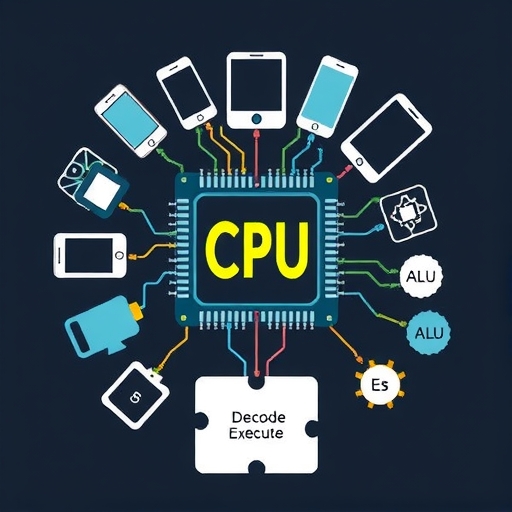

Just as the human brain has different regions responsible for specific functions, the CPU is composed of several specialized components that work together harmoniously to execute instructions. While the sheer complexity of a modern CPU, containing billions of transistors, is astounding, we can break down its core functional units to understand its operation. Think of these as the specialized departments within our high-performance engine.

What are these crucial parts? Let’s identify some of the most significant ones:

- Control Unit (CU): This is often considered the conductor of the CPU orchestra. The Control Unit is responsible for managing and coordinating the flow of data within the CPU and between the CPU and other components of the computer, such as memory and input/output devices. It fetches instructions from memory, determines what action is required, and directs other components to perform the necessary operations. It doesn’t perform the actual operations itself but tells everyone else what to do and when.

- Arithmetic/Logic Unit (ALU): This is the CPU’s calculator and decision-maker. The ALU performs all the arithmetic operations (like addition, subtraction, multiplication, division) and logical operations (like comparisons: is A greater than B? Are X and Y the same?). When the Control Unit fetches an instruction that requires calculation or comparison, it sends the data and the required operation to the ALU for processing. The results are then written to a register or passed along for the next step.

- Memory Unit (or interfaces to Memory): While the CPU has its own internal memory components (like cache and registers), it constantly needs to access the computer’s main memory (RAM). The CPU interacts with the Memory Unit to fetch instructions and data and to store results temporarily. Think of RAM as the CPU’s short-term workspace, and the CPU needs efficient ways to get information in and out of it quickly.

| Component | Function |
|---|---|
| Control Unit (CU) | Manages and coordinates data flow within the CPU and with other components. |
| Arithmetic/Logic Unit (ALU) | Performs arithmetic and logical operations. |
| Memory Unit | Interacts with RAM for fetching and storing data. |

Understanding these core components gives you a better appreciation for the complexity packed into that small chip. They don’t operate independently; they work in perfect concert, orchestrated by the Control Unit, to execute the instructions given by software.
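To make the ALU’s role concrete, here is a minimal Python sketch of a toy 8-bit ALU. It is an illustration under simplifying assumptions, not how real hardware is built: the `alu` function and the operation names (`ADD`, `SUB`, `AND`, `OR`, `XOR`, `LT`) are hypothetical, and the 8-bit width is chosen only to show how fixed-size hardware wraps around on overflow. The returned zero flag stands in for the status flags a real ALU reports back for the Control Unit to act on.

```python
# A toy 8-bit Arithmetic/Logic Unit (ALU). Hypothetical sketch: real ALUs are
# digital circuits, and the operation names here are invented for illustration.

WIDTH = 8
MASK = (1 << WIDTH) - 1  # 0xFF: results are truncated to 8 bits, like fixed-width hardware

OPERATIONS = {
    "ADD": lambda a, b: a + b,
    "SUB": lambda a, b: a - b,
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "LT": lambda a, b: int(a < b),  # comparison: 1 if a is less than b, else 0
}


def alu(op: str, a: int, b: int) -> tuple[int, bool]:
    """Apply one arithmetic or logical operation and return (result, zero_flag).

    The zero flag records whether the result is 0; a control unit can use it to
    decide what to do next, for example whether two values compared equal.
    """
    if op not in OPERATIONS:
        raise ValueError(f"unsupported operation: {op}")
    result = OPERATIONS[op](a, b) & MASK  # wrap around on overflow or underflow
    return result, result == 0


if __name__ == "__main__":
    print(alu("ADD", 200, 100))  # 300 does not fit in 8 bits, so it wraps
    print(alu("SUB", 7, 7))      # equal values: result 0, zero flag set
```

Subtracting two values and checking the zero flag is one simple way a CPU can test whether they are equal: `alu("SUB", 7, 7)` returns `(0, True)`, while `alu("ADD", 200, 100)` returns `(44, False)` because 300 does not fit in 8 bits.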

Now that we know the components, how do they work together to make the computer do anything? The CPU’s fundamental operation revolves around a continuous loop known as the Fetch-Decode-Execute Cycle. This cycle is the heartbeat of the CPU, repeating billions of times per second to carry out the instructions written in the software programs you use.

Let’s break down this cycle:

- Fetch: The cycle begins with the Control Unit fetching the next instruction from the computer’s main memory (RAM). The address of this instruction is stored in the Program Counter register. The instruction is copied from memory into the Instruction Register, and the Program Counter is updated to point to the next instruction in sequence. Think of this as picking up the next step from a recipe book (memory) and putting it on your counter (Instruction Register).

- Decode: Once the instruction is in the Instruction Register, the Control Unit decodes it. This means translating the instruction into commands that the other parts of the CPU can understand. The Control Unit determines what operation needs to be performed (e.g., addition, comparison, data movement) and which components (like the ALU) will be needed to perform it. It’s like reading the recipe step and figuring out “Okay, this says ‘add sugar’, so I’ll need the sugar, the bowl, and I need to tell my hands (the ALU) to perform the ‘add’ action.”

- Execute: In this final stage, the CPU performs the operation specified by the instruction. If it’s an arithmetic or logic operation, the Control Unit sends the data and the necessary command to the ALU, which performs the calculation or comparison. If it’s a data movement instruction, the Control Unit directs the data to be moved between registers, memory, or I/O devices. The results of the execution might be stored in registers or written back to memory. This is where you actually perform the recipe step – adding the sugar to the bowl.

| Cycle Step | Description |
|---|---|
| Fetch | Retrieve the next instruction from memory. |
| Decode | Translate the instruction into executable commands. |
| Execute | Perform the operation specified by the instruction. |

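Once the Execution phase is complete, the CPU immediately starts the cycle again, fetching the next instruction pointed to by the updated Program Counter. This relentless, high-speed cycle is what allows your computer to perform millions or billions of operations every second. On a single core, it also creates the illusion of simultaneous activity: the operating system switches rapidly between programs, while the core itself works through instructions one after another, just incredibly fast. (Modern cores overlap the stages of several instructions at once, a technique called pipelining, but the results are the same as if each instruction ran in order.)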
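The cycle can be sketched as a loop. The following Python toy CPU is a hypothetical illustration: its opcodes (`LOAD`, `ADD`, `SUB`, `JUMP`, `JUMP_IF_ZERO`, `HALT`), its four registers, and the tuple-based instruction format are invented for clarity, whereas real instruction sets encode instructions as binary numbers. Each pass through the loop fetches the instruction at the Program Counter into an Instruction Register, decodes it into an operation and operands, and executes it.

```python
# Toy CPU illustrating the Fetch-Decode-Execute cycle.
# Hypothetical instruction set: opcodes, registers, and the tuple format
# are invented for this sketch and do not match any real CPU.

def run(program: list[tuple], max_steps: int = 1_000) -> dict[str, int]:
    registers = {"A": 0, "B": 0, "C": 0, "D": 0}
    pc = 0  # Program Counter: address of the next instruction to fetch

    for _ in range(max_steps):
        # FETCH: copy the instruction at the PC into the Instruction Register,
        # then advance the PC so it points at the next instruction in sequence.
        ir = program[pc]
        pc += 1

        # DECODE: split the instruction into an operation and its operands.
        opcode, *operands = ir

        # EXECUTE: perform the operation; the arithmetic here stands in for the ALU.
        if opcode == "LOAD":            # LOAD reg, value -> reg = value
            reg, value = operands
            registers[reg] = value
        elif opcode == "ADD":           # ADD dst, src -> dst = dst + src
            dst, src = operands
            registers[dst] += registers[src]
        elif opcode == "SUB":           # SUB dst, src -> dst = dst - src
            dst, src = operands
            registers[dst] -= registers[src]
        elif opcode == "JUMP":          # JUMP address -> continue at address
            (address,) = operands
            pc = address
        elif opcode == "JUMP_IF_ZERO":  # JUMP_IF_ZERO reg, address
            reg, address = operands
            if registers[reg] == 0:
                pc = address
        elif opcode == "HALT":
            return registers
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    raise RuntimeError("program did not halt within max_steps")


# Multiply 4 by 3 via repeated addition: A accumulates, B counts down.
MULTIPLY_PROGRAM = [
    ("LOAD", "B", 3),          # 0: loop counter
    ("LOAD", "C", 1),          # 1: constant 1 for decrementing
    ("LOAD", "D", 4),          # 2: value to add each iteration
    ("JUMP_IF_ZERO", "B", 7),  # 3: leave the loop when the counter reaches 0
    ("ADD", "A", "D"),         # 4: A = A + 4
    ("SUB", "B", "C"),         # 5: B = B - 1
    ("JUMP", 3),               # 6: go back to the loop test
    ("HALT",),                 # 7: stop and report the registers
]

if __name__ == "__main__":
    print(run(MULTIPLY_PROGRAM))
```

Running the sample program leaves `12` in register `A`. The jump instructions work by overwriting the Program Counter, which is how loops and decisions change which instruction gets fetched next.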

Understanding this basic cycle is key to appreciating the sheer volume of work a CPU does and how its speed and efficiency directly translate into the performance you experience when using a device.

Measuring Muscle: How CPU Performance is Determined

When you look at CPU specifications, you’re often presented with a set of numbers and terms: gigahertz, core counts, cache sizes. These aren’t just random figures; they are critical indicators of a CPU’s performance capability. But what do they actually mean, and how do they combine to give you that overall sense of speed and power?

Let’s break down the primary factors that determine how well a CPU performs:

- Clock Speed (Frequency): Measured in hertz (Hz), kilohertz (kHz), megahertz (MHz), or, most commonly today, gigahertz (GHz), the clock speed is the number of cycles the CPU’s internal clock generates per second. A 3 GHz CPU ticks through 3 billion cycles per second. Each clock cycle is a beat on which the CPU advances its work, but a clock cycle is not the same as a full Fetch-Decode-Execute cycle: depending on the design, a core may finish several instructions per clock cycle or need several cycles for a single instruction, so the average number of instructions completed per cycle (IPC) varies between architectures. All else being equal, a higher clock speed means the CPU can process instructions faster. Think of it as the engine’s RPM: higher RPM generally means more potential power output.

- Number of Cores: Modern CPUs typically have multiple “cores.” A core is essentially a complete processing unit within the CPU, capable of performing the Fetch-Decode-Execute cycle independently. A dual-core CPU has two such units, a quad-core has four, and so on. Having multiple cores is like having multiple workers available. This significantly enhances the CPU’s ability to handle multiple tasks simultaneously (multitasking) or to run software programs that are designed to divide their workload among several cores (multithreading). While higher clock speed makes a single task potentially run faster, more cores allow the CPU to juggle many tasks more efficiently.

- Cache Size: Cache is a small amount of very fast memory built into the CPU, usually organized in levels (L1, L2, L3). The size of these caches directly impacts performance. A larger cache can store more frequently used data and instructions, reducing how often the CPU must fetch data from slower main memory (RAM). Accessing cache is many times faster than accessing RAM (often by one to two orders of magnitude), so a larger, well-used cache can substantially improve the speed at which the CPU gets the information it needs, thus speeding up processing.
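The effect of cache size can be sketched with a small simulation. This Python example assumes a least-recently-used (LRU) replacement policy; real CPU caches use a variety of policies, often hardware approximations of LRU. The latencies (1 ns for a cache hit, 100 ns extra for a trip to RAM) are illustrative assumptions, not measurements, and `simulate_lru_cache` and `average_access_time_ns` are hypothetical helper names.

```python
from collections import OrderedDict


def simulate_lru_cache(addresses: list[int], capacity: int) -> tuple[int, int]:
    """Return (hits, misses) for a least-recently-used (LRU) cache."""
    cache = OrderedDict()
    hits = misses = 0
    for address in addresses:
        if address in cache:
            hits += 1
            cache.move_to_end(address)      # mark as most recently used
        else:
            misses += 1                     # data must come from slower main memory
            cache[address] = None
            if len(cache) > capacity:
                cache.popitem(last=False)   # evict the least recently used entry
    return hits, misses


def average_access_time_ns(miss_rate: float, hit_time_ns: float, miss_penalty_ns: float) -> float:
    """Average memory access time: every access checks the cache; misses also pay for RAM."""
    return hit_time_ns + miss_rate * miss_penalty_ns


if __name__ == "__main__":
    # A program that repeatedly loops over the same 6 memory locations.
    pattern = list(range(6)) * 10
    for capacity in (4, 8):
        hits, misses = simulate_lru_cache(pattern, capacity)
        miss_rate = misses / len(pattern)
        # Illustrative latencies only: 1 ns for cache, 100 ns extra for RAM.
        amat = average_access_time_ns(miss_rate, 1.0, 100.0)
        print(f"capacity={capacity}: hits={hits}, misses={misses}, avg={amat:.1f} ns")
```

With room for only 4 entries, the looping pattern of 6 addresses evicts each entry just before it is needed again, so every access misses (about 101 ns on average under these assumptions). With 8 entries the whole working set fits, and after the first 6 misses every access hits (about 11 ns).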

When evaluating a CPU’s performance, you need to consider the interplay of all these factors. A high clock speed is good, but many cores and a large cache are also vital, and a modern, efficient architecture can leverage all of these elements most effectively. Understanding these metrics allows you to interpret CPU specifications and anticipate how well a device will perform for different types of tasks.
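A rough back-of-the-envelope model shows how clock speed and core count interact. This Python sketch assumes a fixed average instructions-per-cycle (IPC) value, which in reality varies with the workload and architecture, and reports throughput in GIPS (billions of instructions per second). The `CpuSpec` class and both example CPUs are hypothetical, with made-up specs rather than real products.

```python
from dataclasses import dataclass


@dataclass
class CpuSpec:
    name: str
    clock_ghz: float  # billions of clock cycles per second
    cores: int
    ipc: float        # assumed average instructions per cycle per core (varies by workload)

    def cycle_time_ns(self) -> float:
        # One cycle lasts 1 / frequency; at 1 GHz that is exactly 1 nanosecond.
        return 1.0 / self.clock_ghz

    def single_core_gips(self) -> float:
        # Billions of instructions per second on one core.
        return self.clock_ghz * self.ipc

    def all_core_gips(self) -> float:
        # Idealized ceiling: assumes every core stays busy and never waits on memory.
        return self.single_core_gips() * self.cores


if __name__ == "__main__":
    # Two hypothetical CPUs with made-up specs, not real products.
    fast_clock = CpuSpec("High-clock 6-core", clock_ghz=5.0, cores=6, ipc=2.0)
    many_cores = CpuSpec("Lower-clock 16-core", clock_ghz=3.0, cores=16, ipc=2.0)
    for cpu in (fast_clock, many_cores):
        print(f"{cpu.name}: cycle {cpu.cycle_time_ns():.3f} ns, "
              f"1 core {cpu.single_core_gips():.1f} GIPS, "
              f"all cores {cpu.all_core_gips():.1f} GIPS")
```

Under these assumptions the 5 GHz chip wins on a single-threaded task (10 versus 6 billion instructions per second), while the 16-core chip has the higher ceiling when work is spread across all cores (96 versus 60). These are idealized upper bounds; cache behavior, memory speed, and how well the software parallelizes determine how close a real system gets.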

Performance in Practice: What These Metrics Mean for You

Alright, we’ve talked about clock speeds, cores, cache, and architecture. But what does that actually translate to when you’re using your computer, playing a game, or running sophisticated trading software? Connecting these technical specifications to real-world experience is key, both for choosing the right device for your needs and for understanding the value of different CPU generations in the market.

A more powerful CPU, defined by the combination of the factors we discussed, generally means a better user experience across various applications. Here’s how CPU performance directly impacts what you do:

- Faster Application Loading: When you click to open a program, the CPU has to fetch its instructions and data from storage and memory and begin executing them. A faster CPU can shorten this process, although load times also depend heavily on the speed of your storage drive.

- Smoother Multitasking: If you’re like most people, you probably have multiple applications open at once – a web browser with many tabs, an email client, a spreadsheet, and perhaps a chat application. A CPU with more cores and efficient architecture can switch between or run these tasks more effectively, preventing the system from feeling sluggish or unresponsive when you jump from one application to another.

- Improved Responsiveness: Overall system responsiveness – how quickly your computer reacts to your mouse clicks, keyboard input, and other commands – is heavily dependent on the CPU. A faster CPU ensures there’s less delay between your action and the computer’s reaction.

| Impact Area | Effect of CPU Performance |
|---|---|
| Application Loading | Faster completion of loading processes. |
| Multitasking | More efficient handling of multiple applications. |
| System Responsiveness | Reduces delay in user commands. |

Have you ever used an old computer and felt frustrated by how slow it was? That frustration was likely a direct result of an older, less powerful CPU struggling to keep up with the demands of modern software. Conversely, upgrading to a system with a significantly better CPU often feels like a dramatic improvement in usability and speed, making everyday tasks feel effortless.

For traders, especially those using platforms that run complex algorithms, backtesting strategies on historical data, or relying on real-time data analysis tools, CPU performance is critically important. A powerful CPU can execute these calculations faster, potentially saving valuable time and allowing for more complex analysis.

Different Engines for Different Vehicles: Exploring CPU Architectures

Not all CPUs are built the same way, even if they perform the same fundamental task of executing instructions. Two layers of design matter here: the Instruction Set Architecture (ISA), which defines the set of instructions software can use, and the microarchitecture, which is how a particular chip implements that ISA in hardware. Different choices at both levels produce CPUs optimized for different purposes. The two dominant architectures you’ll encounter are x86/x64 and ARM.

Think of it like vehicles: both a powerful semi-truck and a fuel-efficient scooter are designed to transport things, but they are built very differently to serve distinct needs.

- x86/x64 Architecture:

  - Developed By: Intel created the original x86 architecture, and AMD created its 64-bit extension (x86-64, often called x64). Today both Intel and AMD design x86/x64 CPUs.

  - Design Philosophy: Historically focused on performance and compatibility with a vast library of legacy software. It uses a Complex Instruction Set Computing (CISC) approach, meaning instructions can be quite complex, performing multiple low-level operations with a single instruction.

  - Typical Use Cases: Dominant in desktop computers, laptops (though ARM is gaining traction), workstations, and especially servers (datacenter and cloud computing). These are environments where high performance, raw processing power, and running demanding software are paramount.

  - Characteristics: Generally consume more power and generate more heat compared to ARM CPUs, but traditionally offer higher peak performance for single-threaded or moderately multithreaded tasks, particularly for complex desktop applications.

- ARM Architecture:

  - Developed By: ARM Holdings, which licenses the architecture to many other companies, such as Apple, Qualcomm, MediaTek, and Samsung, that design their own chips based on it.

  - Design Philosophy: Historically focused on power efficiency and cost-effectiveness. It uses a Reduced Instruction Set Computing (RISC) approach: each instruction is simpler and typically completes in fewer clock cycles, but more instructions may be needed to perform the same task as a single CISC instruction. This simplicity often leads to more power-efficient designs.

  - Typical Use Cases: Dominant in mobile devices (smartphones, tablets), embedded systems (IoT devices, smart TVs, automotive), and increasingly making inroads into laptops and servers (e.g., Apple’s M-series chips, AWS Graviton). These are environments where battery life, thermal management, and energy efficiency are critical.

  - Characteristics: Offer excellent performance per watt of power consumed. ARM chips historically trailed x86 in peak performance, but recent ARM designs have become very powerful, challenging the traditional performance lead of x86 in some areas.
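The CISC/RISC contrast can be illustrated with a tiny interpreter. In this Python sketch, the opcodes (`ADD_MEM`, `LOAD`, `ADD`, `STORE`), register names, and addresses are all hypothetical and do not correspond to real x86 or ARM instructions. It shows the same task, adding one memory value to another, done by one complex instruction that works directly on memory versus a sequence of simple instructions that only do arithmetic on registers.

```python
# Contrast a CISC-style instruction with an equivalent RISC-style sequence.
# All opcodes are hypothetical and do not correspond to real x86 or ARM instructions.

def execute(program: list[tuple], memory: dict[int, int]) -> tuple[dict[int, int], int]:
    """Run a program on a copy of memory; return (final memory, instructions executed).

    This sketch has no jumps, so the number executed equals the program length.
    """
    memory = dict(memory)          # leave the caller's memory untouched
    registers = {"r1": 0, "r2": 0}
    for opcode, *operands in program:
        if opcode == "ADD_MEM":    # CISC-style: read two memory cells, add, write back
            dst_addr, src_addr = operands
            memory[dst_addr] = memory[dst_addr] + memory[src_addr]
        elif opcode == "LOAD":     # RISC-style: copy a memory cell into a register
            reg, addr = operands
            registers[reg] = memory[addr]
        elif opcode == "ADD":      # RISC-style: add two registers
            dst, src = operands
            registers[dst] = registers[dst] + registers[src]
        elif opcode == "STORE":    # RISC-style: copy a register back to memory
            reg, addr = operands
            memory[addr] = registers[reg]
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return memory, len(program)


# Task: add the value at address 0x14 to the value at address 0x10.
CISC_PROGRAM = [("ADD_MEM", 0x10, 0x14)]
RISC_PROGRAM = [
    ("LOAD", "r1", 0x10),
    ("LOAD", "r2", 0x14),
    ("ADD", "r1", "r2"),
    ("STORE", "r1", 0x10),
]

if __name__ == "__main__":
    start = {0x10: 7, 0x14: 5}
    print(execute(CISC_PROGRAM, start))  # one complex instruction
    print(execute(RISC_PROGRAM, start))  # four simple instructions
```

Both programs leave the same result in memory; the difference is one instruction versus four. Fewer instructions do not automatically mean faster execution, since a complex instruction can take more cycles, and modern x86 chips internally break complex instructions into simpler micro-operations.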

The choice of architecture dictates the types of devices a CPU is best suited for and influences factors like battery life, cooling requirements, and software compatibility. While x86 has long been the standard for personal computing and servers, ARM’s focus on efficiency has made it ideal for the mobile revolution and is now making significant waves in areas traditionally dominated by x86, driving innovation and competition in the CPU market.

From Vacuum Tubes to Billions: The CPU’s Remarkable Evolution

To truly appreciate the modern CPU, it’s helpful to glance back at its origins. The concept of a central processing unit performing sequential instructions is not new, but its physical form has undergone a staggering transformation, shrinking from room-sized behemoths to fingernail-sized chips.

The earliest ancestors of the CPU can be traced back to the very first electronic computers. One notable example is the ENIAC (Electronic Numerical Integrator and Computer), unveiled in 1946. ENIAC was a massive machine, weighing 30 tons and filling a large room, using thousands of vacuum tubes to perform calculations. It wasn’t a single integrated CPU as we know it, but rather a collection of processing units that could be programmed (albeit with physical rewiring) to execute sequences of instructions. These early machines were groundbreaking but slow, prone to failure (vacuum tubes burned out frequently), consumed enormous amounts of power, and were incredibly expensive.

The invention of the transistor in 1947 and later the integrated circuit (IC) in the late 1950s and early 1960s marked a pivotal turning point. Integrated circuits allowed multiple transistors and other electronic components to be fabricated onto a single piece of semiconductor material (silicon). This invention paved the way for the creation of the microprocessor – an entire CPU implemented on a single IC chip.

The first commercial single-chip microprocessor was the Intel 4004, released in 1971. This marked the true beginning of the modern CPU era. Compared to ENIAC, the 4004 was minuscule: it consumed vastly less power, was far more reliable, and eventually became orders of magnitude cheaper to produce. While primitive by today’s standards (it was a 4-bit processor running at a clock speed of only 740 kHz), it demonstrated the immense potential of putting the CPU on a single chip.

From that point, CPU development has been a relentless march of progress, often tracking Moore’s Law, the observation that the number of transistors on an integrated circuit roughly doubles every two years. Packing ever more, ever smaller transistors onto a chip has made CPUs dramatically faster, more powerful, and more power-efficient over the decades.
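Because Moore’s Law describes doubling on a fixed schedule, its growth compounds: five doublings per decade multiply the transistor count by 2^5 = 32. The Python sketch below projects forward from the Intel 4004’s roughly 2,300 transistors. It is an idealized illustration of compounding, not a record of actual chips (real progress has sped up and slowed down at different times), and `projected_transistors` is a hypothetical helper name.

```python
def projected_transistors(start_count: float, years: float, doubling_period_years: float = 2.0) -> float:
    """Transistor count after `years` if it doubles every `doubling_period_years` (idealized)."""
    doublings = years / doubling_period_years
    return start_count * 2 ** doublings


if __name__ == "__main__":
    # Starting point: the Intel 4004 (1971), which had roughly 2,300 transistors.
    for years in (10, 20, 50):
        count = projected_transistors(2_300, years)
        print(f"{1971 + years}: ~{count:,.0f} transistors")
```

Fifty years of doubling every two years is 25 doublings, a factor of about 33.5 million, which takes 2,300 transistors to roughly 77 billion: the same “billions of transistors” territory as today’s chips.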

We’ve seen the transition from 4-bit to 8-bit, 16-bit, 32-bit, and now widely 64-bit architectures (hence x64). Clock speeds soared from kilohertz to megahertz and then gigahertz. The introduction of multiple cores became standard. Cache sizes grew from kilobytes to megabytes. New architectures and specialized instruction sets were developed to handle increasingly complex tasks.

This incredible evolution has made computing ubiquitous, powering everything from supercomputers to the tiny chips in your smart toothbrush. Understanding this journey from room-sized calculators to pocket-sized supercomputers helps frame the constant innovation and competitive drive within the CPU market.

The Global CPU Market: Players, Growth, and Investment Significance

Given the CPU’s foundational role in virtually all technology, it’s no surprise that the global CPU market is enormous and a critical sector within the broader technology industry. For investors and traders, understanding this market means understanding a key driver of growth, competition, and innovation in tech.

The CPU market is substantial and projected for significant growth. According to market analysis, the global CPU market was valued at tens of billions of U.S. dollars and is expected to grow at a Compound Annual Growth Rate (CAGR) of over 10% in the coming years, potentially reaching over $100 billion by 2030. This growth is fueled by several factors:

- Increasing demand for computing power from data centers and cloud computing services.

- The proliferation of Artificial Intelligence and machine learning workloads, which require powerful processors.

- The continued growth of the gaming industry, demanding higher-performance desktop and laptop CPUs.

- The expansion of the Internet of Things (IoT), putting small, often ARM-based CPUs into billions of connected devices.

- The refresh cycle of personal computers and enterprise hardware.
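A compound annual growth rate (CAGR) projection follows the formula end value = start value × (1 + rate)^years. The Python sketch below applies it, along with the inverse calculation that recovers the rate from two values. The inputs (a $50 billion starting size, 10% growth, eight years) are hypothetical numbers chosen for illustration only, not actual market data, and the function names are our own.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after `years` of compounding at annual rate `cagr` (0.10 means 10%)."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Hypothetical inputs for illustration only, not actual market data.
    start = 50.0   # market size in billions of USD (assumed)
    rate = 0.10    # 10% compound annual growth (assumed)
    years = 8
    end = project_value(start, rate, years)
    print(f"${start:.0f}B growing {rate:.0%} a year for {years} years -> ${end:.1f}B")
    print(f"Implied CAGR check: {implied_cagr(start, end, years):.2%}")
```

At 10% a year, a value grows by a factor of about 2.14 over eight years, so a market in the tens of billions can plausibly cross $100 billion within a decade at that rate. The same arithmetic can be used to sanity-check any published growth projection.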

Who are the key players in this massive and competitive market? The landscape varies slightly depending on the segment (desktop, server, mobile, embedded), but some names consistently dominate:

- Intel: Historically the dominant player in the x86 desktop, laptop, and server markets. Intel has been a CPU powerhouse for decades, driving many of the architectural advancements in the x86 space. They design and manufacture their own chips.

- AMD (Advanced Micro Devices): The primary competitor to Intel in the x86 desktop, laptop, and server markets. In recent years, AMD has gained significant market share with its competitive Ryzen (desktop/laptop) and EPYC (server) processor lineups, challenging Intel’s long-held dominance through innovative architecture and performance. Unlike Intel, AMD is fabless: it designs its chips but relies on third-party foundries, such as TSMC, to manufacture them.

- ARM Holdings: While not manufacturing CPUs themselves for the most part, ARM is arguably the most influential player in terms of architecture design, particularly in the mobile and embedded space. They design the ARM instruction set architecture and core designs, which they license to other companies.

- Other Chip Designers (Often ARM Licensees): Many companies design their own chips based on ARM licenses and typically rely on foundries such as TSMC to manufacture them. Prominent examples include Apple (with their highly successful M-series chips for Macs and A-series for iPhones/iPads), Qualcomm (dominant in mobile processors with their Snapdragon series), and MediaTek (another major player in mobile and embedded chips). These companies are significant suppliers in specific segments, leveraging the power-efficient ARM architecture.

The competition between these players, particularly the ongoing rivalry between Intel and AMD in the x86 space and the rise of high-performance ARM designs from companies like Apple, is a major dynamic in the market. This competition drives innovation, pushes performance boundaries, and influences pricing strategies, all of which are important factors for investors tracking the semiconductor and technology sectors.

Why the CPU Market Matters for Investors and Traders

So, you might be asking, “Why should I, an investor or trader focused on financial markets or perhaps technical analysis, care about the intricacies of CPU technology?” The answer lies in the CPU’s role as a fundamental building block of the digital economy and its direct connection to the performance and value of major technology companies.

The CPU is not just a component; it’s an enabler of technological progress. The increasing need for faster, more efficient processing power is a direct driver of growth in several key areas:

- Cloud Computing: Cloud infrastructure relies heavily on vast data centers filled with servers, each powered by multiple high-performance CPUs (primarily x86 from Intel and AMD, with increasing adoption of ARM-based solutions). As cloud adoption grows, so does the demand for server CPUs, making companies in this segment key players in the tech investment landscape.

- Artificial Intelligence & Machine Learning: Many AI and ML workloads require significant compute resources. While specialized hardware like GPUs (Graphics Processing Units) is often used for training AI models, CPUs are essential for data preparation, running inference (using a trained model), and managing the overall system. Advances in CPU design, including specialized AI instruction sets, are critical for the continued growth of AI applications.

- 5G and Edge Computing: The rollout of 5G and the move towards processing data closer to its source (edge computing) require powerful, often energy-efficient processors deployed in diverse locations, from base stations to IoT gateways. ARM-based CPUs are particularly relevant in this space.

- Gaming and Entertainment: The booming video game industry drives demand for powerful CPUs in gaming PCs and consoles, constantly pushing the need for higher clock speeds and more cores to render complex virtual worlds.

- Innovation Across Sectors: From autonomous vehicles and robotics to advanced medical imaging and scientific research, nearly every field benefiting from technological advancement relies on increasingly sophisticated computing power provided by CPUs.

For investors, companies like Intel, AMD, and ARM (or companies leveraging ARM designs like Apple and Qualcomm) are major players whose performance is directly tied to the health and growth of the CPU market and the technological trends it supports. Understanding the competitive landscape, architectural shifts (like the rise of ARM in traditional x86 strongholds), and the underlying demand drivers provides crucial context when evaluating these stocks or the broader semiconductor industry.

Moreover, many software companies, cloud providers, and device manufacturers (like Dell, HP, Lenovo, Apple) are heavily reliant on CPU technology. Their ability to innovate and compete is often linked to the CPUs they use. A shortage of CPUs, a significant performance leap by a competitor, or a major architectural shift can have ripple effects throughout the tech ecosystem, impacting company performance and stock valuations.

In essence, the CPU market is a barometer for the health and direction of the entire technology sector. Staying informed about CPU developments and market dynamics is a strategic advantage for anyone investing in or trading technology stocks.

Optimizing Performance: Beyond the Hardware

While the CPU’s hardware specifications are the foundation of performance, the story doesn’t end there. Just as a powerful engine needs a skilled driver and well-tuned systems to perform at its best, a CPU relies on software and other factors to deliver its full potential. Understanding these elements is crucial for users looking to maximize their device’s speed and for investors appreciating the full picture of system performance.

What else impacts how well a CPU performs in your day-to-day tasks?

- Operating System (OS) Efficiency: The operating system (like Windows, macOS, Linux, Android) is the primary software that interacts directly with the CPU. A well-optimized OS can schedule tasks efficiently across multiple cores, manage memory effectively, and utilize the CPU’s advanced features and instruction sets. An inefficient OS or one poorly matched to the hardware can limit even a powerful CPU’s performance.

- Drivers: Drivers are software programs that allow the operating system to communicate with hardware components, such as the motherboard chipset (via chipset drivers) and the graphics card (via graphics drivers, which often work closely with the CPU). Outdated or poorly written drivers can create bottlenecks, causing the CPU to wait or perform unnecessary work, thereby reducing overall system performance. Keeping drivers updated is essential.

- Software Optimization: The efficiency of the applications you run significantly impacts CPU usage. Well-optimized software is written to make the best use of the CPU’s capabilities, leveraging multiple cores, caching, and specific instruction sets when possible. Poorly optimized software might only use one core, perform inefficient calculations, or access memory in ways that create bottlenecks, leading to high CPU usage but slow performance.

| Factor | Impact on Performance |
|---|---|
| Operating System Efficiency | Affects task scheduling and memory management. |
| Drivers | Can create bottlenecks if outdated. |
| Software Optimization | Efficient software provides better CPU utilization. |

Understanding these factors provides a holistic view of performance. A high-end CPU is a necessary but not sufficient condition for a fast computer. The software environment and supporting hardware must also be optimized to allow the CPU to perform at its peak. For investors evaluating tech companies, considering how they optimize their software or hardware designs to leverage CPU capabilities is part of understanding their overall product competitiveness.
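Why software that uses only one core cannot benefit from a many-core CPU can be quantified with Amdahl’s law, a standard formula we are bringing in here as an illustration (it is not drawn from this article’s sources). It gives the best-case speedup on n cores when a fraction p of the work can run in parallel: 1 / ((1 - p) + p / n). The sketch assumes the parallel part splits perfectly evenly and ignores coordination overhead, so real speedups are lower; `amdahl_speedup` is a hypothetical helper name.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup on `cores` cores when only `parallel_fraction` of the work parallelizes.

    Amdahl's law: speedup = 1 / ((1 - p) + p / n). The serial part (1 - p)
    runs on one core no matter how many cores are available.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Compare a single-threaded program with partly and mostly parallel ones.
    for p in (0.0, 0.5, 0.95):
        speedups = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 4, 16))
        print(f"parallel fraction {p:.0%} -> {speedups}")
```

A program that uses only one core (p = 0) gains nothing from extra cores. At p = 0.5, even 16 cores give less than a 2x speedup (about 1.88x), and only heavily parallel software (p = 0.95 here) gets close to making good use of a many-core chip, at about 9.14x on 16 cores.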

The Foundation of Innovation: The CPU’s Enduring Role

We’ve journeyed from the fundamental definition of a CPU and its internal workings to its evolution and impact on the global technology market. It’s clear that the Central Processing Unit is far more than just a chip on a circuit board; it is the fundamental engine driving the digital age.

We’ve seen how its core function of executing instructions, supported by specialized components like the ALU and Control Unit operating through the Fetch-Decode-Execute cycle, underpins every task your devices perform. We’ve dissected the metrics – clock speed, cores, cache, architecture – that define its power and explored how these translate into the speed and responsiveness you experience every day.

Understanding the architectural differences between x86/x64 and ARM reveals how CPUs are tailored for diverse applications, from power-hungry servers to energy-sipping smartphones, shaping the landscape of devices available to us. And by tracing its history from giant, unreliable vacuum tube machines to integrated microprocessors containing billions of transistors, we gain perspective on the incredible pace of technological advancement driven, in large part, by CPU innovation.

Finally, we connected the technical details to the broader economic picture, highlighting the significant and growing global CPU market, the intense competition between major players like Intel, AMD, and those leveraging ARM designs, and why this market is critically important for investors and traders tracking the technology sector. The CPU market’s health is intertwined with the growth of cloud computing, AI, IoT, and countless other technologies that are reshaping our world.

Whether you are a beginner investor trying to grasp the tech industry, a trader looking for insights into semiconductor stocks, or simply someone who uses electronic devices daily, a deeper understanding of the CPU provides valuable context. It reveals the intricate processes happening invisibly, the relentless innovation driving performance, and the economic forces at play in a critical segment of the global economy.

The CPU remains at the forefront of technological development. As demands for processing power continue to grow with the rise of more sophisticated AI, immersive virtual and augmented reality, and increasingly complex simulations, the drive for faster, more efficient, and more specialized CPUs will continue unabated. The chip that started as a room full of vacuum tubes will undoubtedly continue to evolve, powering the next wave of innovation and remaining a cornerstone of the digital future.

By understanding the CPU, you are not just learning about a piece of hardware; you are gaining insight into the fundamental engine of progress that impacts technology, business, and our daily lives in countless ways. It is a powerful piece of knowledge to carry forward as you navigate the ever-evolving landscape of technology and investment.

CPU Definition FAQ

Q: What does CPU stand for?

A: CPU stands for Central Processing Unit, which is the primary component of a computer that processes instructions.

Q: How does CPU performance affect my computer?

A: The CPU’s performance impacts how quickly applications run, how smoothly you can multitask, and the overall responsiveness of the device.

Q: What are the main factors that determine CPU performance?

A: The main factors include clock speed, number of cores, cache size, and architecture type.